The RoadBotics R&D team has been hard at work making sure our reconstruction technology will be compatible with these new media sources. We’re happy to report that this work has been largely successful so far!

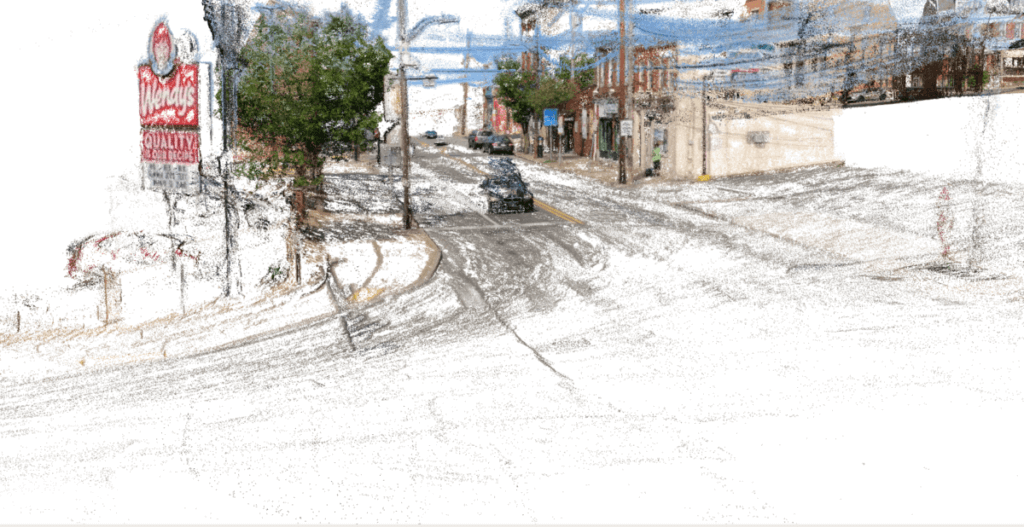

Here are results from one of our most exciting new data sources: GoPro footage. This is a slice of video taken by our Product Manager, Todd, using an off-the-shelf GoPro Max. For those of you familiar with the Pittsburgh area, this stretch of video runs from the 40th Street Bridge in Lawrenceville over to Main Street. The video was in no way optimized for high-quality 3D reconstruction; it was a casual GoPro capture taken while driving.

Here is what individual frames of that video look like on their own:

This kind of image is commonly called a fisheye image because it is taken with a fisheye lens, which captures a much wider field of view than a standard smartphone camera and visibly bends straight lines toward the edges of the frame. Our older 3D reconstruction pipeline was built mainly for standard smartphone video, so it needed to be adjusted to handle fisheye imagery properly.
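To illustrate why such an adjustment is needed, here is a minimal sketch of how a pixel can be turned into a 3D viewing ray under two camera models. It assumes an idealized *equidistant* fisheye model (image radius r = f·θ) and compares it with the ordinary pinhole model (r = f·tan θ) that smartphone-oriented pipelines typically assume. This is not necessarily the model our pipeline uses: the function names, the focal length `f`, and the principal point `(cx, cy)` are hypothetical, and real GoPro lenses need a calibrated distortion model (OpenCV's `cv2.fisheye` module, for example, uses a polynomial in θ).

```python
import math


def fisheye_pixel_to_ray(u, v, f, cx, cy):
    """Back-project pixel (u, v) to a unit ray, assuming an equidistant fisheye (r = f * theta).

    Hypothetical sketch: f is the focal length in pixels, (cx, cy) the principal point.
    """
    dx, dy = u - cx, v - cy
    r = math.hypot(dx, dy)       # distance from the image center, in pixels
    theta = r / f                # angle from the optical axis; can exceed 90 degrees
    phi = math.atan2(dy, dx)     # direction around the optical axis
    s = math.sin(theta)
    return (s * math.cos(phi), s * math.sin(phi), math.cos(theta))


def pinhole_pixel_to_ray(u, v, f, cx, cy):
    """Same back-projection under a pinhole model (r = f * tan(theta)), for comparison.

    The z component is always positive, so a pinhole camera can never represent
    rays at or beyond 90 degrees from the optical axis.
    """
    x, y = (u - cx) / f, (v - cy) / f
    n = math.sqrt(x * x + y * y + 1.0)
    return (x / n, y / n, 1.0 / n)
```

For example, with a hypothetical `f = 600` pixels, a pixel about 942 pixels from the image center maps to a ray roughly 90° off the optical axis under the fisheye model, while the pinhole model would interpret the same pixel as a much narrower angle. Feeding fisheye frames into a pipeline that assumes the pinhole model therefore places rays in the wrong directions and distorts the reconstruction.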

Once those adjustments were made, we were able to produce 3D reconstructions like the ones in our previous posts.

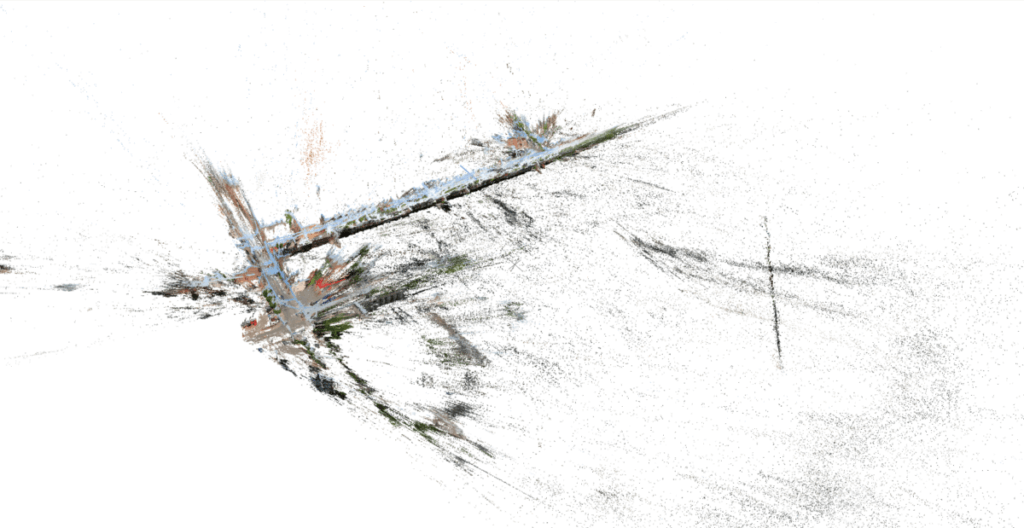

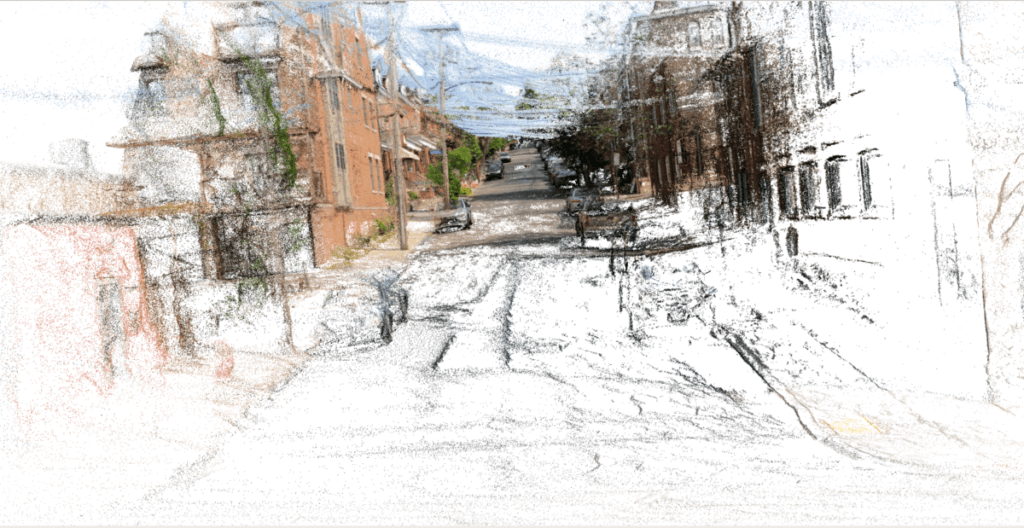

This is what the reconstruction looks like at first:

You may recognize some of the artifacts and oddities in this reconstruction from our prior R&D posts, and indeed it needs some cleaning up.

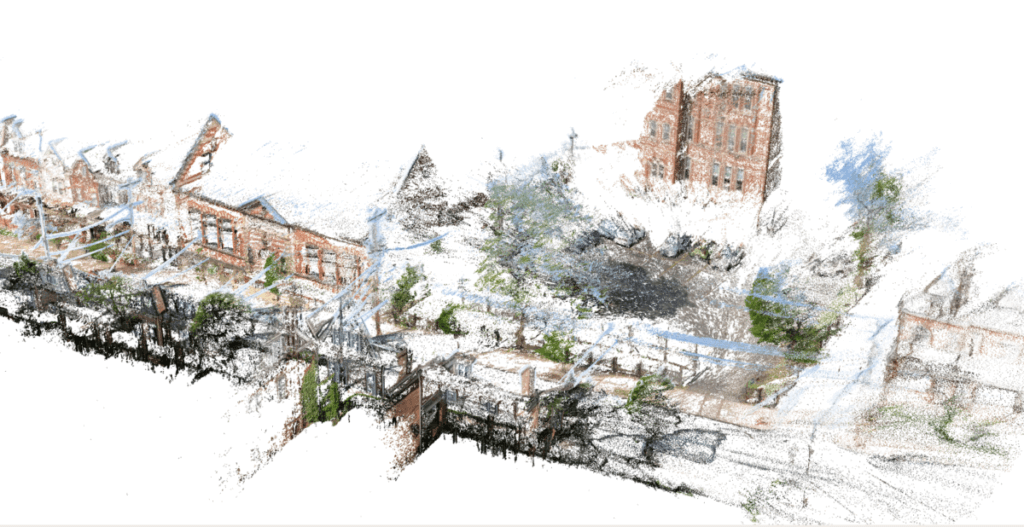

One of the most straightforward ways we’ve developed to clean up reconstruction models is what we call a ‘moving envelope’ process. The idea is pretty simple. We determine where the camera was in the 3D model for each image used to build it, and then we define a real-world radius around each of those camera positions (for this model, about 20 meters). Next, we cut out every point in the reconstruction that is not within 20 meters of at least one camera.
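The filtering step can be sketched in a few lines of NumPy. This is a minimal illustration, not our production code: it assumes the point cloud and the recovered camera centers are already available as `(N, 3)` and `(M, 3)` arrays in a model scaled to meters, and the function name `moving_envelope_mask` and its `chunk_size` parameter are hypothetical. A point is kept if its nearest camera center lies within `radius`.

```python
import numpy as np


def moving_envelope_mask(points, camera_centers, radius=20.0, chunk_size=100_000):
    """Return a boolean mask of points lying within `radius` of at least one camera.

    Hypothetical sketch: assumes both inputs are (N, 3) / (M, 3) arrays in meters.
    """
    points = np.asarray(points, dtype=float)
    cams = np.asarray(camera_centers, dtype=float)
    keep = np.zeros(len(points), dtype=bool)
    if len(cams) == 0:
        return keep  # no cameras means nothing falls inside the envelope
    r2 = radius * radius
    # Process points in chunks so the (chunk, M) distance matrix stays small in memory.
    for start in range(0, len(points), chunk_size):
        chunk = points[start:start + chunk_size]
        diff = chunk[:, None, :] - cams[None, :, :]      # (chunk, M, 3) offsets
        d2 = np.einsum("ijk,ijk->ij", diff, diff)        # squared distances to every camera
        keep[start:start + chunk_size] = d2.min(axis=1) <= r2
    return keep


# Usage: two cameras at x = 0 and x = 95; the point at x = 50 is too far from both.
pts = np.array([[0, 0, 0], [5, 0, 0], [50, 0, 0], [100, 0, 0]])
cams = np.array([[0, 0, 0], [95, 0, 0]])
cleaned = pts[moving_envelope_mask(pts, cams, radius=20.0)]
```

The brute-force distance computation keeps the sketch dependency-light. For point clouds with millions of points and thousands of frames, a spatial index such as SciPy's `cKDTree` would be the more practical way to find each point's nearest camera.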

We end up with a 3D model that closely envelops the original camera positions, so the cleaned reconstruction follows the path along which the imagery was captured.

The reconstruction quality from a GoPro Max is surprisingly good. Here are some of the most remarkable captures we extracted directly from the 3D model.

What’s more, by combining our existing AI models with the reconstruction, we can extract some very interesting information.

Stay tuned for our next blog post where we show you how using 3D models can lead to automated asset extraction!