Road conditions in the United States are in steady decline, a point emphasized by the “D” grade they received in 2021’s American Society of Civil Engineers (ASCE) Infrastructure Report Card. Issues such as aging infrastructure, inadequate funding, and increasing traffic demands are major contributors to road deterioration and key drivers of innovation in this space.

A number of factors influence how quickly and to what degree road maintenance can be improved. One of the greatest challenges facing road managers today is being able to easily identify which roads need repairs and where those roads are located.

Current methods of assessing roads, even when following a standardized system, leave much to be desired. Advancements in technology like artificial intelligence (AI), and more specifically machine learning (ML), present opportunities to resolve these challenges and improve road assessment methods.

Since being developed at Carnegie Mellon University in 2016, RoadBotics by Michelin has used proprietary ML algorithms to assess over 49,000 miles of road, earning a reputation as a leader in automated road assessments.

The Inadequacies of Current Road Assessment Methods

Road assessment methods range from manual approaches to advanced high-tech solutions, and some communities use no method at all. However, each of these approaches has its limitations and inadequacies.

Many communities rely on manual inspections. Assessing roads using this approach consists of having a public works director or civil engineer visually inspect all roads in their community. Even for communities with small networks, these inspections are time-consuming, subject to the engineer’s judgment, and prone to human error.

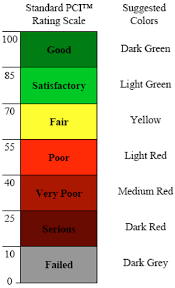

Using a standardized method of inspection offers opportunity for improvement, but often trades accuracy for speed. For example, the Pavement Condition Index (PCI), an accepted standardized method, relies on sampling small portions of a road network to make decisions regarding the entire network. This speeds up the assessment process, but sampling a non-representative section of road leads to misinformed maintenance strategies and missed opportunities for impactful repairs. Pavement Surface Evaluation and Rating (PASER) is yet another standard that exists, but like all other manual inspection methods, takes time to perform and training to perform correctly.

Unfortunately, the time-consuming and tedious nature of manual road assessments means most road owners do not have the time to assess all of their roads. This further perpetuates the use of sampling or multi-year gaps between assessments, despite the fact that these lead to skewed results and poor use of maintenance budgets.

Many technology-assisted methods of road assessment that are capable of surveying an entire road network in a timely fashion require expensive equipment and extensive training. The International Roughness Index (IRI) typically relies on an inertial measurement device attached to a vehicle to measure vertical movement of the vehicle as it travels over the road (i.e. the “roughness” of the road surface). This allows for faster assessments, but if the vehicle does not hit a pothole or other road distress, that distress is not detected. Alternatively, scanner vans use many sensors (LiDAR, radar, thermal cameras, high-precision GPS, etc.) to capture the state of road pavement. However, the format and sheer volume of data recorded require special software and a trained professional to interpret it, not to mention the extra cost of outfitting and maintaining a vehicle with these expensive sensors.

In the absence of a fast-yet-cost-effective method for road assessments that does not sacrifice accuracy, many communities rely on a more reactive approach, fixing damage like alligator cracking or potholes as it is reported. Unfortunately, once damage is severe enough to get the attention of the general public, it is often far more expensive to repair than if it had been caught at the first sign of wear. In this way, poor road assessment methods can end up costing a community even more time and money.

How Does RoadBotics By Michelin Apply Machine Learning To Improve the Road Survey Process?

Contrary to first impressions, the intention is not to replace the engineer surveying the roads with an ML model. Instead, RoadBotics by Michelin seeks to apply years of research into the challenges facing road assessments to create a tool that makes those assessments less time-consuming and tedious.

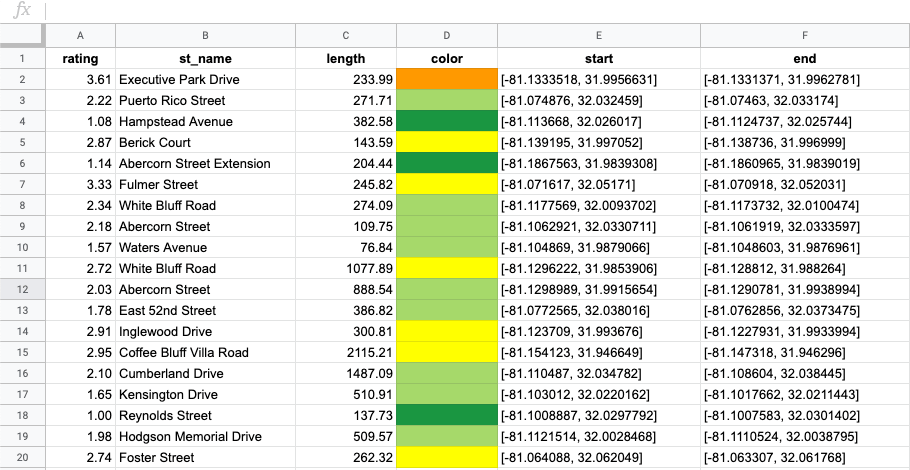

It starts with a convenient data collection process. A regular smartphone, mounted on the dashboard of any vehicle and running the RoadBotics data collection app, is used to record GPS and high-definition video data as a person drives down each road in the network being assessed.

This method of data collection allows for roads to be assessed at driving speeds – much faster than manual surveying and similar to scan-van data collection. It does not require any special equipment – just a smartphone to record the data and another to provide customized turn-by-turn directions.

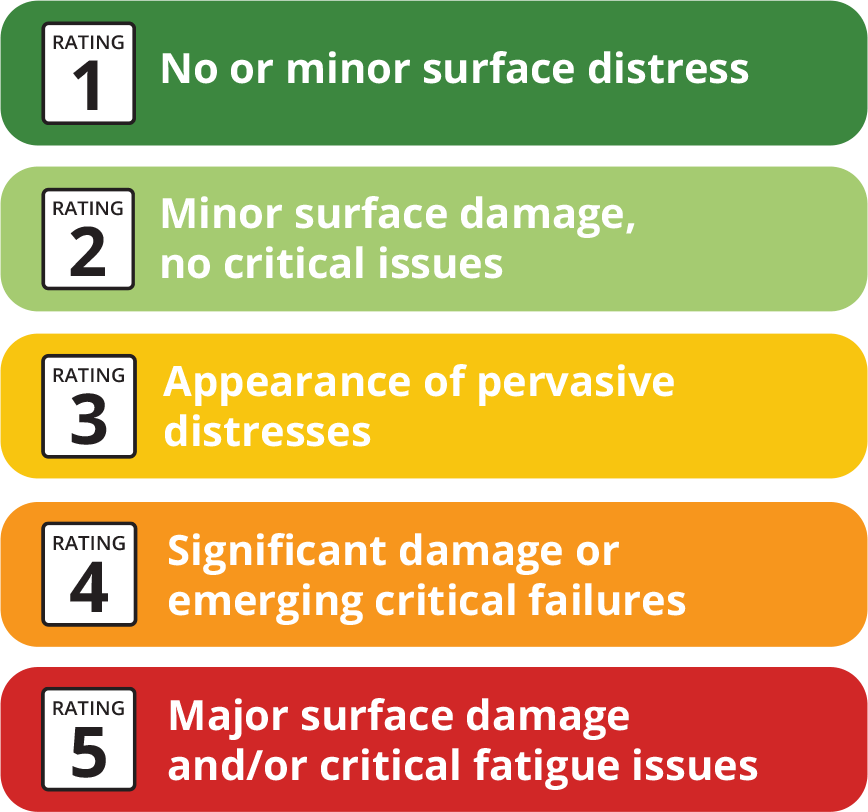

Once collection is complete, data is uploaded to a secure cloud platform. RoadBotics’ ML model categorizes each 10-foot section of road on a rating scale of 1 to 5. Sections rated a 1 are in perfect condition, and those rated a 5 are in the worst condition. The end product from an assessment is an interactive and color-coded map of roads and their ratings.

Establishing Trust in Machine Learning Based Road Assessment

While this sounds great, many prospective clients ask how they can trust a deep learning model. More specifically, how does it determine a rating for each 10-foot section of road? Data scientists researching deep learning are well aware of the intricacies involved in answering this particular question. To lend some perspective, there is an entire field of active research dedicated to developing deep learning models that can offer explanations for their reasoning.

So how can such a model be trusted to provide accurate road assessments if it cannot tell us how it makes the assessment? First, when developing ML models, RoadBotics by Michelin uses standard methods in machine learning to check performance. Second, they compare the model's performance to that of humans on the same task. This second step points to a perhaps uncomfortable truth: humans are not perfect. But it also reveals that an ML model does not need to be perfect to make the work easier and more efficient. If it helps civil engineers focus their valuable time and attention on the roads that need it most, it is a net gain.

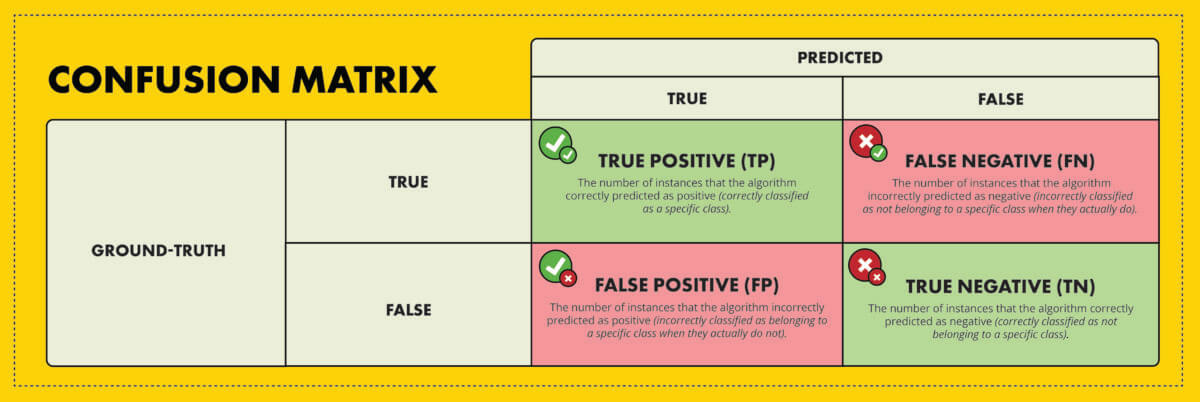

After training was complete, they tested the model using the test set to determine performance. As is standard in machine learning, they used a confusion matrix, as well as precision and recall to quantify model performance.

A confusion matrix is a square matrix where the ground-truth classification for a set of data is plotted along one axis and the model’s predicted classification for that data is plotted along the other axis. Each cell of the confusion matrix holds the number (or proportion) of instances in the data that fall into that combination of true and predicted category. For a binary classification (i.e. true or false), the matrix has four cells: true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN).

By analyzing the values in the confusion matrix, various performance metrics can be calculated, such as:

- Precision: The proportion of true positive (TP) predictions out of all positive predictions (TP + FP) made by the machine learning algorithm. A high precision score indicates that the algorithm has a low rate of falsely classifying negative instances as positive.

- Recall: The proportion of true positive (TP) predictions out of all actual positive instances present in the dataset (TP + FN). A high recall score indicates that the machine learning algorithm is effective in identifying the majority of positive instances correctly.

These metrics, along with the confusion matrix, are regularly used in data science and ML to determine an algorithm’s performance and diagnose problems during development. In most cases, the goal is to minimize both false positives and false negatives. This helps to achieve a well-balanced and reliable model that accurately classifies real-world data.
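As a rough illustration of the definitions above, the following minimal sketch counts the four cells of a binary confusion matrix and derives precision and recall from them. The labels are made up for the example (here `True` stands for "this section is damaged"), and the function names are hypothetical; this is not RoadBotics' code or data.

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, and TN for binary labels (True = positive)."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth and pred:
            counts["TP"] += 1      # correctly flagged as positive
        elif not truth and pred:
            counts["FP"] += 1      # flagged as positive, actually negative
        elif truth and not pred:
            counts["FN"] += 1      # missed positive
        else:
            counts["TN"] += 1      # correctly left as negative
    return counts


def precision(counts):
    """TP / (TP + FP); returns 0.0 when there are no positive predictions."""
    denom = counts["TP"] + counts["FP"]
    return counts["TP"] / denom if denom else 0.0


def recall(counts):
    """TP / (TP + FN); returns 0.0 when there are no actual positives."""
    denom = counts["TP"] + counts["FN"]
    return counts["TP"] / denom if denom else 0.0


# Made-up example labels, not real assessment data.
y_true = [True, True, True, False, False, False, True, False]
y_pred = [True, True, False, False, True, False, True, False]

counts = confusion_counts(y_true, y_pred)
print(dict(counts))
print("precision:", precision(counts))
print("recall:", recall(counts))
```

A five-level rating scale would use a 5×5 matrix instead, with precision and recall computed per rating level in the same way.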

When looking at these metrics, as well as how often the ratings from RoadBotics’ ML model were off by 1, 2, 3, or 4 from the ground truth, they found that the model performed better than the individual experts did in the study mentioned earlier. To provide an additional level of certainty, they tested their model on data that was not yet available at the time it was developed.
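One simple way to measure how often ratings are off by 1, 2, 3, or 4 is to tabulate the absolute difference between each predicted rating and its ground-truth rating. The sketch below assumes ratings are integers from 1 to 5; the function name and the sample ratings are invented for illustration, and the source does not describe how RoadBotics performs this calculation.

```python
from collections import Counter


def rating_error_distribution(ground_truth, predicted):
    """Return the fraction of sections whose rating is off by 0, 1, 2, 3, or 4."""
    if len(ground_truth) != len(predicted):
        raise ValueError("ground_truth and predicted must have the same length")
    if not ground_truth:
        raise ValueError("at least one rating is required")
    # Absolute error per section; on a 1-5 scale this is between 0 and 4.
    diffs = Counter(abs(t - p) for t, p in zip(ground_truth, predicted))
    total = len(ground_truth)
    return {k: diffs.get(k, 0) / total for k in range(5)}


# Made-up ratings for ten sections, not real assessment data.
truth = [1, 2, 3, 4, 5, 3, 2, 1, 4, 5]
model = [1, 2, 4, 4, 5, 3, 1, 1, 2, 5]

dist = rating_error_distribution(truth, model)
for off_by, share in dist.items():
    print(f"off by {off_by}: {share:.0%}")
```

Running the same function on an expert's ratings against the same ground truth gives a like-for-like comparison between the model and a human rater.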

This is the ultimate test of real-world performance. The model’s performance metrics were as good as those of the experts from their study, and in some cases were even better.

Blending Technology and Human Expertise is the Future of Road Maintenance

While a granular view of roads can be helpful, more data is not always better. Beyond a certain point, it is unlikely that a higher volume of data will lead to different final decisions. It is important to consider this reality when creating technology to improve road assessment methods. Road managers do not repair their roads in 10-foot sections, since the time, effort, and money required to perform maintenance are more efficiently used by working on larger sections of road. Using their proprietary AI, RoadBotics by Michelin ensures a thorough assessment by rating every 10 feet of road, but does not overlook how maintenance is executed in the real world.

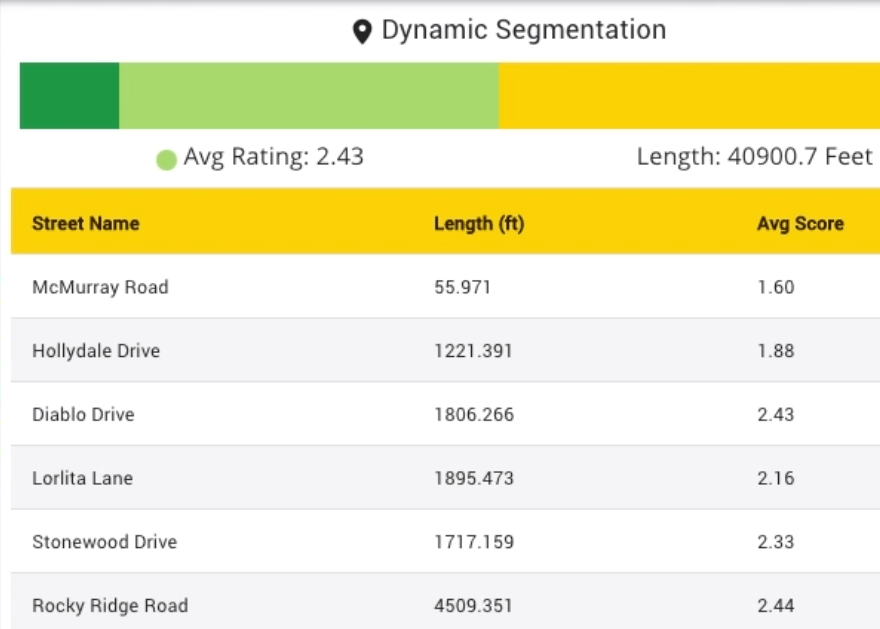

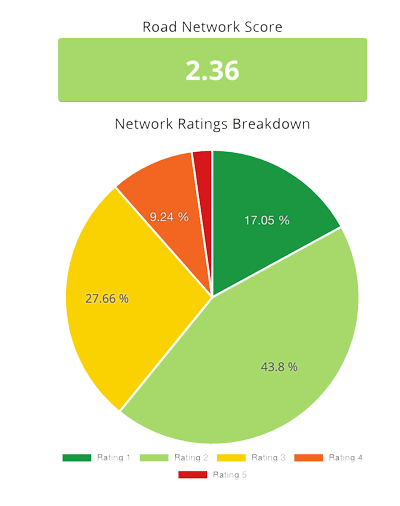

They provide an aggregate rating and other statistics for individual roads based on the assessments of the 10-foot sections that make up any given road. Aggregating the data leads to even more accurate results at a resolution that road managers can take action on.
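To make the idea of aggregation concrete, here is a minimal sketch that rolls per-section ratings up into per-road statistics. The article does not say which aggregate or statistics RoadBotics reports, so the choice of mean rating, worst rating, and share of sections rated 4 or 5 is purely an assumption for illustration, as are the road names and ratings.

```python
from collections import defaultdict
from statistics import mean


def aggregate_by_road(section_ratings):
    """Summarize (road_name, rating) pairs, one pair per 10-foot section.

    The statistics chosen here are illustrative assumptions, not the
    vendor's actual aggregation method.
    """
    by_road = defaultdict(list)
    for road, rating in section_ratings:
        by_road[road].append(rating)

    summary = {}
    for road, ratings in by_road.items():
        summary[road] = {
            "sections": len(ratings),
            "mean_rating": round(mean(ratings), 2),
            "worst_rating": max(ratings),  # 5 is the worst condition
            "share_rated_4_or_5": round(
                sum(r >= 4 for r in ratings) / len(ratings), 2
            ),
        }
    return summary


# Made-up section ratings for two hypothetical roads.
sections = [
    ("Main St", 1), ("Main St", 2), ("Main St", 2), ("Main St", 5),
    ("Oak Ave", 3), ("Oak Ave", 4), ("Oak Ave", 4),
]

summary = aggregate_by_road(sections)
for road, stats in summary.items():
    print(road, stats)
```

Reporting the worst rating alongside the mean keeps a single badly damaged section from being hidden by an otherwise healthy road.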

As alluded to near the beginning, machine learning models, and AI in general, are not meant to replace humans. They are meant to be useful tools that people can trust. RoadBotics by Michelin doesn’t simply run their model and then call it a day. They have a team of people check the output of their model and occasionally make minor adjustments before delivering the final ratings to customers. This helps ensure their clients get accurate assessments, while creating the new data needed to maintain and improve their model over time.

Not to mention, the ratings themselves are not the final say. The ratings they generate are meant to help road managers focus their attention on the areas of their network that need it most.

With more than 4 million miles of road to manage in the United States, checking every mile regularly to find the exact spots needing attention is not possible through human effort alone.

Using AI, RoadBotics by Michelin can give communities an overall view of conditions across their entire road network and make pavement maintenance planning a proactive process, stopping potholes and other severe damage from forming in the first place.